In 2010, Facebook, owner of the largest facial database in the world, learned to distinguish a portrait from a landscape: the social network searched for faces in photos and tagged those areas. Sometimes it made mistakes. Four years later, Facebook could tell with 97% accuracy whether two photos showed the same person or two different people.

This is a major advance for Facebook, but its algorithm still loses out to the human brain in three percent of such cases. If somebody asks us to recognize a familiar person in low-resolution photos, we’ll do it better than computers, even if the images were taken from an unusual angle.

This is unusual, as computers are usually more accurate than human beings. Why are we better at solving such challenges, and how do computers try to do the same?

Our brains have gone through serious training

It has emerged that a certain brain area specializes in facial recognition. It’s called the fusiform gyrus, and it spans parts of the temporal and occipital lobes. Human beings learn to distinguish faces from birth: infants develop this skill in the first days of their lives. As early as four months, a baby’s brain already distinguishes one uncle from another, and aunties as well, of course.

The eyes, cheekbones, nose, mouth and eyebrows are the key facial features that help us recognize each other. Skin is also important, especially its texture and color. Notably, our brain tends to process a face as a whole and mostly doesn’t focus on individual features. This is why we can easily recognize people even if they hide half of their face behind a scarf or a piece of paper. However, if somebody makes a simple collage that merges the faces of two famous people, viewers might need some time to work out who is who in the picture.

This is what you’ll see if you combine Brad Pitt’s and Angelina Jolie’s portraits

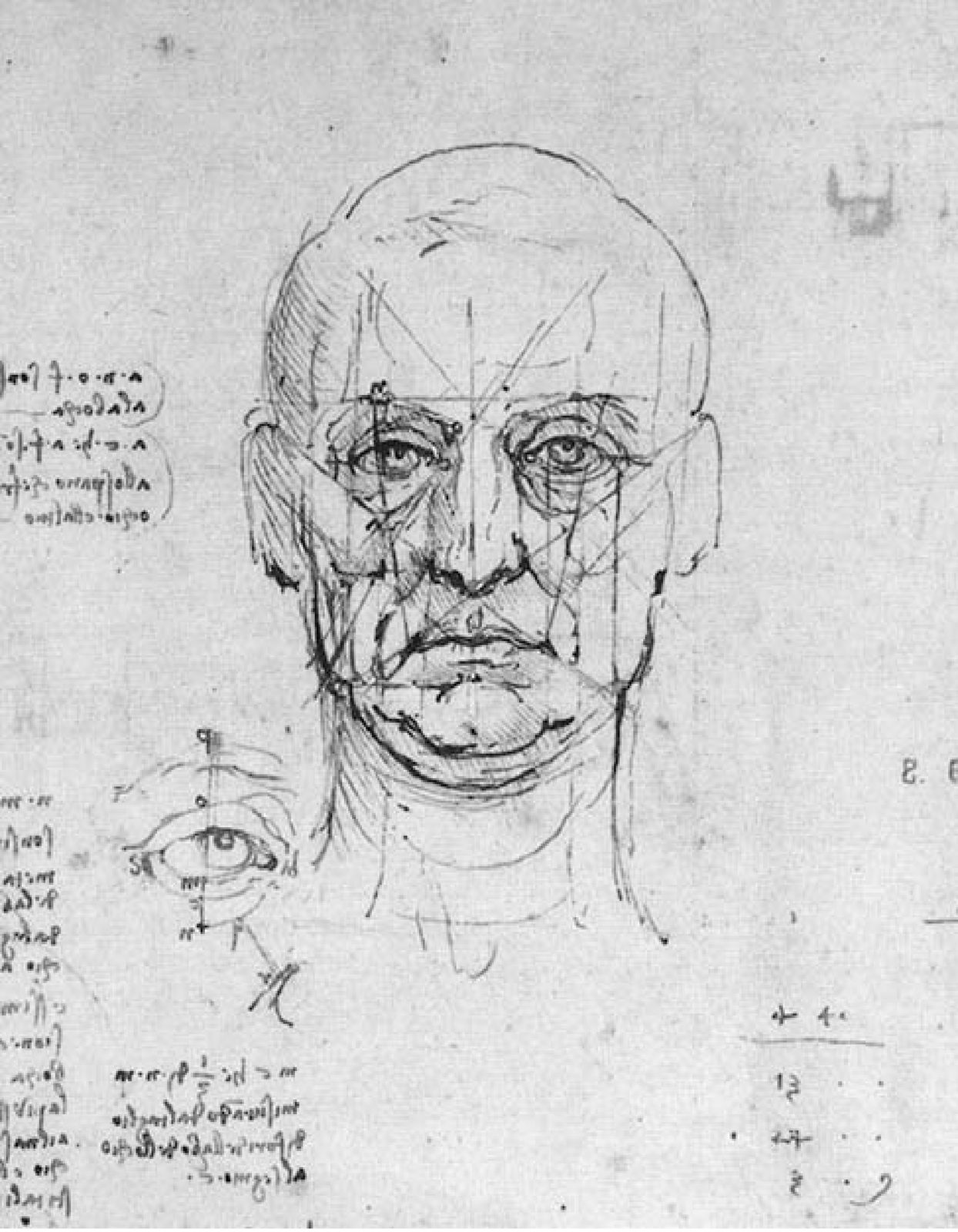

From birth, our brain stores faces. We gradually build a general template and use it for facial processing. If one could draw this template it might look like this:

Facial processing happens when our brain compares a person’s appearance with this internal template: whether the nose is wider, the lips fuller, the skin tone warmer or cooler, and so on. Those of us who rarely travel sometimes say that people of other races look very similar. They think so because their templates are “sensitized” to the facial features common in their surroundings.

By the way, some animals, such as dogs and monkeys, can distinguish faces as well. Though sniffing gives them a lot of useful information, visual images also help these animals recognize other living creatures. Interestingly, dogs, man’s best friends, not only easily read our mood by looking at our faces but can also learn how to smile.

How does a computer recognize faces?

What is the connection between human smiles and facial processing? The two are almost inseparable, because any expression can change our faces beyond recognition, especially for computer algorithms.

Software can compare two front-facing portraits and determine whether they depict the same person. These solutions work much like portrait painters: they analyze so-called nodal points on human faces. These points are what make each face distinctive; different methods find from 80 to 150 nodal points on a single face.

For example, artists and software both measure the distance between the eyes, the width of the nose, the depth of the eye sockets, the shape of the cheekbones, the length of the jawline and so on.
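As a rough illustration of how such measurements could be compared, here is a minimal Python sketch. It is not how any particular product works: the landmark names, the coordinates and the 0.1 threshold are all made up for the example, and real systems use far more points. Every distance is divided by the distance between the eyes, so the result does not depend on the size of the photo.

```python
import math

def distance(a, b):
    """Euclidean distance between two (x, y) points."""
    return math.hypot(a[0] - b[0], a[1] - b[1])

def face_features(points):
    """Turn a few landmark points into ratios relative to the eye distance."""
    eye_distance = distance(points["left_eye"], points["right_eye"])
    return [
        distance(points["nose_left"], points["nose_right"]) / eye_distance,  # nose width
        distance(points["jaw_left"], points["jaw_right"]) / eye_distance,    # jaw width
        distance(points["nose_tip"], points["chin"]) / eye_distance,         # lower face height
    ]

def same_person(face_a, face_b, threshold=0.1):
    """Assumed decision rule: faces match if their feature vectors are close."""
    return math.dist(face_features(face_a), face_features(face_b)) < threshold

# Hypothetical landmark coordinates in pixels, invented for this example.
face_a = {
    "left_eye": (30, 40), "right_eye": (70, 40),
    "nose_left": (42, 60), "nose_right": (58, 60),
    "jaw_left": (20, 80), "jaw_right": (80, 80),
    "nose_tip": (50, 62), "chin": (50, 100),
}
# The same face photographed at twice the resolution.
face_a_large = {name: (2 * x, 2 * y) for name, (x, y) in face_a.items()}
# A different face with a noticeably wider nose.
face_b = dict(face_a, nose_left=(38, 60), nose_right=(62, 60))

print(same_person(face_a, face_a_large))  # True
print(same_person(face_a, face_b))        # False
```

With only three ratios this toy comparison is easy to fool, which is one reason real methods rely on dozens of nodal points.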

When you change the eye level or ask the model to turn their head, these measurements change. As many facial processing algorithms analyze images only in two-dimensional space, the point of view is crucial for accurate recognition. Do you want to stay incognito? Hide your eyes and cheekbones behind sunglasses and cover your chin and mouth with a scarf to preserve anonymity. When we tested the controversial FindFace service, it was able to recognize models only in front-facing portraits.

This is how you can fool facial recognition services that work with “flat images.” However, the morning sun never lasts a day, and more advanced algorithms are already on the way.
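To get a feel for why the viewing angle matters so much to a “flat” algorithm, consider this small Python sketch. It rests on simplifying assumptions that are ours, not any vendor’s: the camera is far away (so perspective is ignored), both eyes sit at the same depth, and the 64 mm eye distance is just an illustrative figure. Under those assumptions, turning the head by some angle shrinks every horizontal measurement by the cosine of that angle.

```python
import math

def projected_width(true_width, yaw_degrees):
    """Apparent horizontal width in a 2D photo after the head turns by yaw_degrees.

    Assumes a distant camera and two points at equal depth (a simplification).
    """
    return true_width * math.cos(math.radians(yaw_degrees))

EYE_DISTANCE_MM = 64.0  # illustrative value only

for yaw in (0, 15, 30, 45, 60):
    apparent = projected_width(EYE_DISTANCE_MM, yaw)
    print(f"head turned {yaw:2d} degrees: eyes appear {apparent:.1f} mm apart")
```

At a 60-degree turn the eyes appear only half as far apart as in a frontal portrait, so a system that has only seen the frontal measurements has little chance of matching them.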

What’s next?

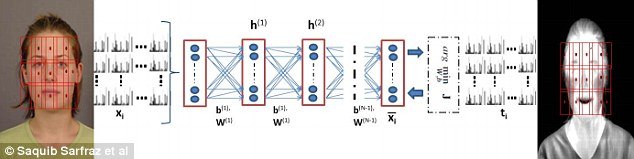

Our brain learns to process faces as we grow up. The ability to distinguish between “us” and “them” is one of the essential skills necessary for survival. Modern computers can learn like humans and program themselves. To improve the results of machine facial processing, developers use self-learning algorithms and feed them hundreds of human portraits as a textbook. It’s not hard to find these images: there are plenty of them online, on social media, photo hosting sites, stock photo sites and other web resources.

Face-based identification became more effective when algorithms started working with 3D models. By projecting a grid onto the face and integrating video capture of the head, software learns how a person looks from different angles. By the way, the templates in human brains are also three-dimensional. Though this technology is still under development, one can already find several proprietary solutions on the market.

The study of facial expressions is also gaining traction. Realistic rendering of emotions is a gold mine for the video game industry, and a great number of companies are working hard to make their characters more and more convincing. Important steps in this direction have already been taken. The same technology will be of great service to facial recognition software: once these solutions master human facial expressions, they will know that the funny face in a photo is probably being pulled by that young girl on the street.

Apart from 3D models, developers are working on other approaches. For example, the company Identix created a biometric technology for face recognition called FaceIt Argus. It analyzes the uniqueness of skin texture: lines, pores, scars and the like. The creators of FaceIt Argus claim that their product can tell identical twins apart, which is not yet possible using facial recognition software alone.

This system is said to be insensitive to changes in facial expression (like blinking, frowning or smiling) and able to compensate for mustache or beard growth and the appearance of eyeglasses. Identification accuracy can be increased by 20 to 25 percent if FaceIt Argus is used together with other facial processing systems. On the other hand, this technology fails if you use low-resolution images taken in low light.

There is another technology to cover that eventuality. Computer scientists at the Karlsruhe Institute of Technology (Germany) have developed a new technique that recognizes infrared portraits of people taken in poor lighting or even in total darkness.

This technology analyzes human thermal signatures and matches mid- or far-infrared images with ordinary photos with up to 80% accuracy. The more images are available, the more successfully the algorithm works. When only one visible image is available, the accuracy drops to 55%.

Making such a match is not as easy as it might seem at first glance: there is no linear correlation between faces in regular and infrared light. An image built from thermal emissions looks quite different from a regular portrait taken in daylight.

The intensity of thermal emissions depends a lot on skin and environment temperatures and even on a person’s mood. Besides, infrared images usually have lower resolution than regular photos, which only makes the task more difficult.

To solve this problem, the scientists turned to a machine learning algorithm and “fed” their system 1,586 photos of 82 people.

It’s everywhere!

Nowadays, facial recognition technologies are used almost all over the world. Uber recently rolled out a similar solution in China to keep track of its own drivers. NEC and Microsoft combine facial processing and IoT to let marketing specialists get to know their clients better, and better, and even better. At the same time, trolls from the Russian 2ch.ru forum use a facial recognition service to attack porn actresses online.

The development of facial recognition technology will make us rethink everything we know about privacy. It will not happen today or even in a year, but it’s already high time to get ready. After all, you can’t replace your face, can you?

If you wonder where technology’s invasion of privacy could lead, we recommend watching the British mini-series “Black Mirror,” especially the “Fifteen Million Merits” episode.
